Feature detection is the process of extracting salient feature points from an image. These points may be blobs, corners, or even edges. Feature detection has numerous applications in image processing and computer vision, such as visual localization and 3D reconstruction. A good feature detector must provide reliable interest points (keypoints) that are scale-invariant, highly distinguishable, robust to noise and distortions, highly repeatable, well localized, easy to implement, and computationally fast. For instance, a change in image intensity should not change the location of the keypoints (Figure 1).

In this work, we show that robust and accurate keypoints exist in a specific scale-space domain. To this end, we first formulate the superimposition problem as a mathematical model and then derive a closed-form solution for multiscale analysis. The model is formulated via difference-of-Gaussian (DoG) kernels in the continuous scale-space domain, and we prove that setting the scale-space pyramid's blurring ratio and smoothness to 2 and 0.627, respectively, facilitates the detection of reliable keypoints. To apply the proposed model to discrete images, we discretize it using the undecimated wavelet transform and the cubic spline function. Theoretically, the complexity of our method is less than 5% of that of the popular baseline, the Scale-Invariant Feature Transform (SIFT). Extensive experimental results show that the proposed feature detector outperforms representative hand-crafted and learning-based techniques in both accuracy and computation time.
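To make the DoG scale-space idea concrete, here is a minimal pure-Python sketch. It is not the FFD implementation: it uses plain Gaussian filtering rather than the undecimated wavelet transform with cubic splines, and it assumes that "smoothness" means the standard deviation of the base Gaussian (0.627) and that "blurring ratio" means the factor between consecutive scales (2). The function names `gaussian_kernel`, `convolve_1d`, `blur`, and `dog_stack` are hypothetical and chosen only for illustration.

```python
import math


def gaussian_kernel(sigma, truncate=4.0):
    """Return a normalized 1D Gaussian kernel, truncated at `truncate` sigmas."""
    radius = max(1, int(math.ceil(truncate * sigma)))
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve_1d(signal, kernel):
    """Filter a 1D list with a symmetric kernel, replicating edge samples."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp index to the border
            acc += w * signal[j]
        out.append(acc)
    return out


def blur(image, sigma):
    """Separable Gaussian blur of a 2D image stored as a list of rows."""
    kernel = gaussian_kernel(sigma)
    rows = [convolve_1d(row, kernel) for row in image]
    cols = [convolve_1d(list(col), kernel) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


def dog_stack(image, sigma0=0.627, ratio=2.0, levels=3):
    """Return (sigmas, dogs), where dogs[i] = blur(sigmas[i + 1]) - blur(sigmas[i]).

    sigma0 and ratio are ASSUMED readings of the "smoothness" and
    "blurring ratio" values quoted in the text.
    """
    sigmas = [sigma0 * ratio ** i for i in range(levels + 1)]
    blurred = [blur(image, s) for s in sigmas]
    dogs = [
        [[b - a for a, b in zip(row_lo, row_hi)]
         for row_lo, row_hi in zip(lo, hi)]
        for lo, hi in zip(blurred, blurred[1:])
    ]
    return sigmas, dogs


if __name__ == "__main__":
    # Toy input: a single bright pixel in the middle of a 21x21 image.
    size, center = 21, 10
    image = [[0.0] * size for _ in range(size)]
    image[center][center] = 1.0

    sigmas, dogs = dog_stack(image)
    finest = dogs[0]
    # The strongest (most negative) response of the finest layer sits on the blob.
    value, row, col = min(
        (finest[r][c], r, c) for r in range(size) for c in range(size)
    )
    print("sigmas:", sigmas)
    print("finest-layer extremum at", (row, col), "value", value)
```

In this toy case the extremum of the finest DoG layer coincides with the bright pixel. A real detector would additionally search for extrema across neighbouring scales and reject weak or poorly localized responses.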

| Feature Detector | Category | Platform | Run Time (ms) |
| --- | --- | --- | --- |
| SIFT | Multiscale | CPU | 552 |
| SURF | Multiscale | CPU | 159 |
| BRISK | Multiscale | CPU | 147 |
| HarrisZ | Multiscale | CPU | 2700 |
| KAZE | Multiscale | CPU | 1500 |
| AKAZE | Multiscale | CPU | 438 |
| DNet | Deep learning | GPU | 1300 |
| TILDE | Deep learning | CPU | 12100 |
| TCDET | Deep learning | GPU | 4100 |
| SuperPoint | Deep learning | GPU | 54 |
| D2Net | Deep learning | GPU | 950 |
| FFD (ours) | Multiscale | CPU | 29 |
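
The run times above can be turned into relative speed-up factors with a few lines of arithmetic. The sketch below only restates the tabulated numbers. The helper name `speedup_over` is hypothetical, and because some detectors were timed on a GPU and others on a CPU, the ratios are indicative rather than a like-for-like comparison.

```python
# Run times in milliseconds, copied from the table above.
run_time_ms = {
    "SIFT": 552,
    "SURF": 159,
    "BRISK": 147,
    "HarrisZ": 2700,
    "KAZE": 1500,
    "AKAZE": 438,
    "DNet": 1300,
    "TILDE": 12100,
    "TCDET": 4100,
    "SuperPoint": 54,
    "D2Net": 950,
    "FFD (ours)": 29,
}


def speedup_over(baseline="FFD (ours)"):
    """Return each detector's run time divided by the baseline's run time."""
    base = run_time_ms[baseline]
    return {name: t / base for name, t in run_time_ms.items()}


if __name__ == "__main__":
    # List detectors from fastest to slowest relative to FFD.
    for name, factor in sorted(speedup_over().items(), key=lambda kv: kv[1]):
        print(f"{name:12s} {factor:7.1f}x")
```

For example, the SIFT entry works out to 552 / 29, or roughly 19 times the FFD run time, and SuperPoint on a GPU to roughly 1.9 times.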

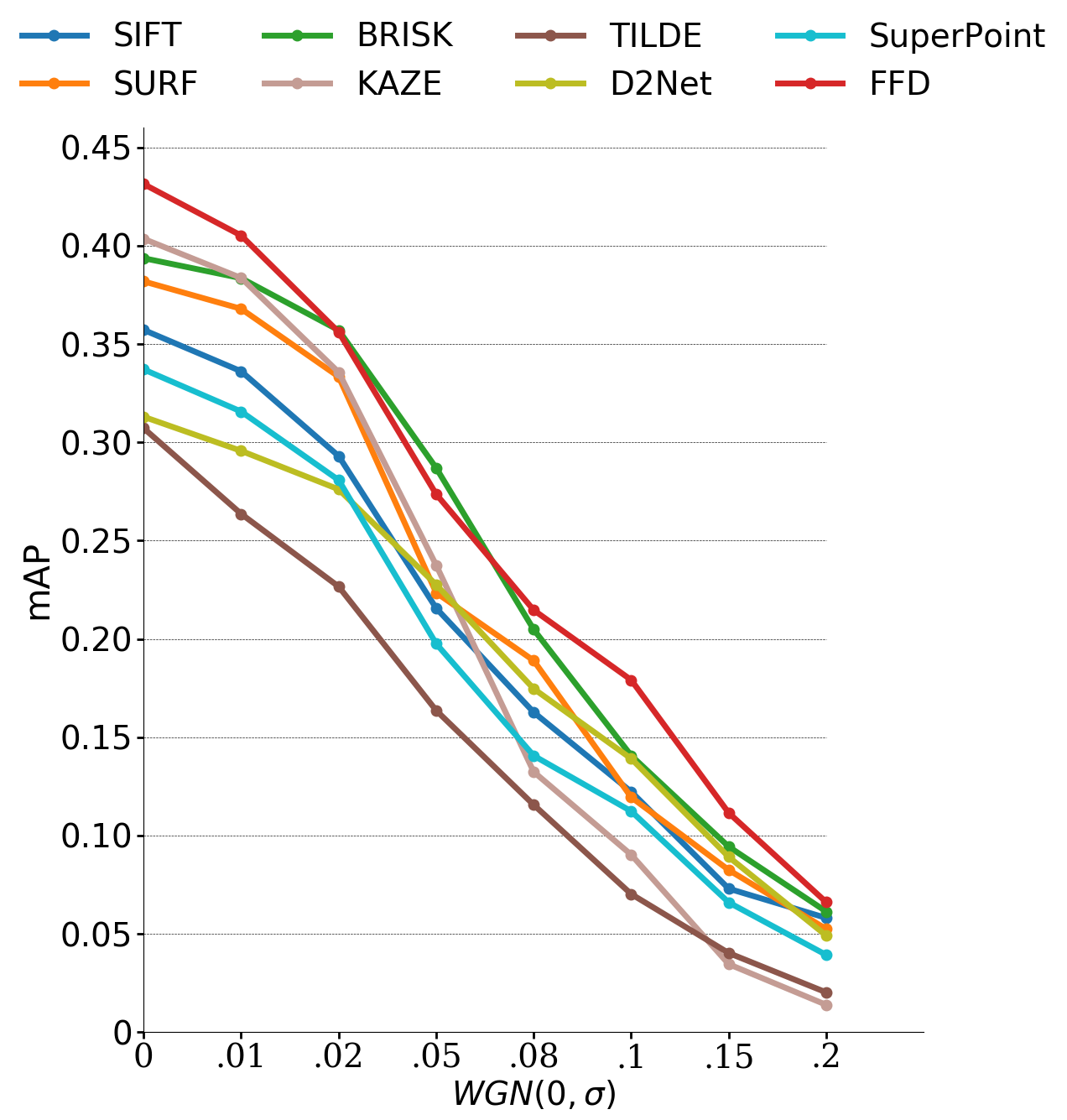

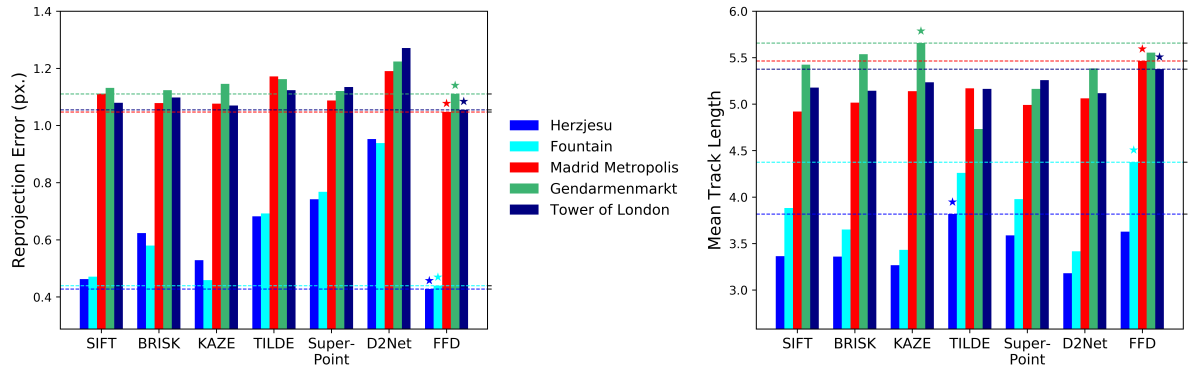

Experimental results and a comparative study with state-of-the-art techniques on several publicly accessible datasets and example applications show that FFD detects more highly reliable feature points in the shortest time, which makes it well suited to real-time applications. A summary of the results is given below.

3D Reconstruction from Multiview images

Visual Localization

| Feature Detector | (0.5 m, 2°) | (1 m, 5°) | (5 m, 10°) |
| --- | --- | --- | --- |
| SIFT | 42.9 | 56.1 | 80.6 |
| SURF | 38.8 | 55.1 | 73.5 |
| BRISK | 39.8 | 59.2 | 77.6 |
| HarrisZ | 41.8 | 57.1 | 75.5 |
| KAZE | 40.6 | 53.0 | 74.4 |
| LIFT | 35.6 | 53.1 | 67.3 |
| DNet | 37.2 | 54.1 | 68.4 |
| TILDE | 38.8 | 54.1 | 69.4 |
| TCDET | 39.8 | 55.1 | 72.5 |
| SuperPoint | 40.8 | 59.2 | 78.6 |
| D2Net | 40.8 | 56.1 | 75.5 |
| FFD | 44.9 | 60.2 | 81.6 |

Robustness